2024 was a busy year for lawmakers (and lobbyists) concerned about AI, most notably in California, where Gavin Newsom signed 18 new AI laws while also vetoing high-profile AI legislation.

And 2025 could see just as much activity, especially at the state level, according to Mark Weatherford. Weatherford has, in his words, seen the “sausage making of policy and legislation” at both the state and federal levels; he has served as Chief Information Security Officer for the states of California and Colorado, as well as Deputy Under Secretary for Cybersecurity under President Barack Obama.

Weatherford said that in recent years he has held different job titles, but his role usually boils down to figuring out “how do we raise the level of conversation around security and around privacy so that we can help influence how policy is made.” Last fall, he joined synthetic data company Gretel as its vice president of policy and standards.

So I was excited to talk to him about what he thinks comes next in AI regulation and why he thinks states are likely to lead the way.

This interview has been edited for length and clarity.

That goal of raising the level of conversation will probably resonate with many folks in the tech industry who have watched congressional hearings about social media or related topics in the past and clutched their heads, seeing what some elected officials know and don’t know. How optimistic are you that lawmakers can get the context they need to make informed decisions around regulation?

Well, I’m very confident they can get there. What I’m less confident about is the timeline to get there. You know, AI is changing daily. It’s mind-blowing to me that issues we were talking about just a month ago have already evolved into something else. So I’m confident that the government will get there, but they need people to help guide them, staff them, educate them.

Earlier this week, the US House of Representatives had a task force they started about a year ago, a task force on artificial intelligence, and they released their report. Well, it took them a year to do this. It’s a 230-page report; I’m wading through it right now. [Weatherford and I first spoke in December.]

[When it comes to] the sausage making of policy and legislation, you’ve got two different, very partisan organizations, and they’re trying to come together and create something that makes everybody happy, which means everything gets watered down just a little bit. It just takes a long time, and now, as we move into a new administration, everything’s up in the air on how much attention certain things are going to get or not.

It sounds like your view is that we may see more regulatory action at the state level in 2025 than at the federal level. Is that right?

I absolutely believe that. I mean, in California, I think Governor [Gavin] Newsom, just within the last couple of months, signed 12 pieces of legislation that had something to do with AI. [Again, it’s 18 by TechCrunch’s count.] He vetoed the big bill on AI, which was going to really require AI companies to invest a lot more in testing and really slow things down.

In fact, I gave a talk in Sacramento yesterday to the California Cybersecurity Education Summit, and I talked a little bit about the legislation that’s happening across the entire US, all of the states, and it’s something like over 400 different pieces of legislation that have been introduced at the state level just in the past 12 months. So there’s a lot going on there.

And I think one of the big concerns, and it’s a big concern in technology in general and in cybersecurity, but we’re seeing it on the artificial intelligence side right now, is that there’s a harmonization requirement. Harmonization is the word that [the Department of Homeland Security] and Harry Coker at the [Biden] White House have been using to [refer to]: How do we harmonize all of these rules and regulations around these different things so that we don’t have this [situation] of everybody doing their own thing, which drives companies crazy? Because then they have to figure out how to comply with all these different laws and regulations in different states.

I do think there’s going to be a lot more activity on the state side, and hopefully we can harmonize these a little bit so there’s not this very diverse set of regulations that companies have to comply with.

I hadn’t heard that term, but that was going to be my next question: I imagine most people would agree that harmonization is a good goal, but are there mechanisms by which that’s happening? What incentive do the states have to actually make sure that their laws and regulations are in line with each other?

Honestly, there’s not a lot of incentive to harmonize regulations, except that I can see the same kind of language popping up in different states, which, to me, indicates that they’re all looking at what each other is doing.

But from a purely, like, “Let’s take a strategic plan approach to this among all the states” standpoint, that’s not going to happen. I don’t have any high hopes for it happening.

Do you think other states might sort of follow California’s lead in terms of the general approach?

A lot of people don’t like to hear this, but California does kind of push the envelope [in tech legislation] in a way that helps people come along, because they do all the heavy lifting; they do a lot of the research that goes into some of that legislation.

The 12 bills that Governor Newsom just passed were all over the map, everything from pornography to using data to train websites to all different kinds of things. They have been pretty comprehensive about leaning forward there.

Although my understanding is that they passed more targeted, specific measures, and then the bigger regulation that got most of the attention, Governor Newsom ultimately vetoed it.

I could see both sides of it. There’s the privacy component that was driving the bill initially, but then you have to consider the cost of doing these things, and the requirements it levies on artificial intelligence companies that are trying to be innovative. So there’s a balance there.

I would fully expect [in 2025] that California is going to pass something a little bit more strict than what they did [in 2024].

And your sense is that at the federal level there’s certainly interest, like the House report that you mentioned, but it’s not necessarily going to be as big a priority, or that we’re going to see major legislation next year?

Well, I don’t know. It depends on how much emphasis the [new] Congress brings. I think we’re going to see. I mean, you read what I read, and what I read is that there’s going to be an emphasis on less regulation. But technology in many respects, certainly around privacy and cybersecurity, is kind of a bipartisan issue; it’s good for everybody.

I’m not a huge fan of regulation; there’s a lot of duplication and a lot of wasted resources that come with so much different legislation. But at the same time, when the safety and security of society is at stake, as it is with AI, I think there’s definitely a place for more regulation.

You mentioned it being a bipartisan issue. My sense is that when there is a split, it’s not always predictable: it isn’t just all the Republican votes versus all the Democratic votes.

That’s a great point. Geography matters, whether we like to admit it or not, and that’s why places like California are really leaning forward in some of their legislation compared to some other states.

Obviously, this is an area that Gretel works in, but it seems like you believe, or the company believes, that more regulation pushes the industry in the direction of more synthetic data.

Maybe. One of the reasons I’m here is that I believe synthetic data is the future of AI. Without data, there’s no AI, and the quality of data is becoming more of an issue as the pool of data either gets used up or shrinks. There’s going to be more and more need for high-quality synthetic data that ensures privacy, eliminates bias, and takes care of all of those kinds of nontechnical, soft issues. We believe that synthetic data is the answer to that. In fact, I’m 100% convinced of it.

This is less directly about policy, though I think it has some policy implications, but I would love to hear more about what brought you around to that point of view. I think there are other folks who recognize the problems you’re talking about but see synthetic data as potentially amplifying whatever biases or problems were in the original data, as opposed to solving the problem.

Sure, that’s the technical part of the conversation. Our customers feel like we have solved that. There is this concept of the flywheel of data generation: if you generate bad data, it gets worse and worse and worse. But you can build controls into this flywheel that validate that the data is not getting worse, that it’s staying the same or getting better each time the flywheel comes around. That’s the problem Gretel has solved.

Many Trump-aligned figures in Silicon Valley have been warning about AI “censorship”: the various weights and guardrails that companies put around the content created by generative AI. Do you think that’s likely to be regulated? Should it be?

Regarding concerns about AI censorship, the government has a number of administrative levers it can pull, and when there is a perceived risk to society, it’s almost certain it will take action.

However, finding that sweet spot between reasonable content moderation and restrictive censorship will be a challenge. The incoming administration has been pretty clear that “less regulation is better” will be the modus operandi, so whether through formal legislation or executive order, or less formal means such as [National Institute of Standards and Technology] guidelines and frameworks or joint statements via interagency coordination, we should expect some guidance.

I want to get back to this question of what good AI regulation might look like. There’s this big spread in how people talk about AI: it’s either going to save the world or destroy the world, it’s the most amazing technology, or it’s wildly overhyped. There are so many divergent opinions about the technology’s potential and its risks. How can a single piece, or even multiple pieces, of AI regulation encompass that?

I think we have to be very careful about managing the sprawl of AI. We have already seen it with deepfakes and some of the really negative aspects; it’s concerning to see young kids in high school, and even younger, generating deepfakes that are getting them in trouble with the law. So I think there’s a place for legislation that controls how people can use artificial intelligence in ways that violate what may be an existing law: we create a new law that reinforces current law, but just brings the AI component into it.

I think we, those of us who have been in the technology space, all have to remember that a lot of this stuff we consider second nature, when I talk to my family members and some of my friends who are not in technology, they literally don’t have a clue what I’m talking about most of the time. We don’t want people to feel like big government is over-regulating, but it’s important to talk about these things in language that non-technologists can understand.

But on the other hand, as you can probably tell just from talking to me, I’m giddy about the future of AI. I see so much goodness coming. I do think we’re going to have a couple of bumpy years as people get more in tune with it and understand it better, and legislation is going to have a place there, both to help people understand what AI means to them and to put some guardrails up around AI.

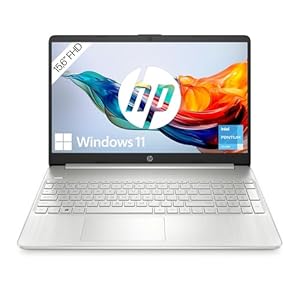

Trending Merchandise