In a new report, a California-based policy group co-led by Fei-Fei Li, an AI pioneer, suggests that lawmakers should consider AI risks that “have not yet been observed in the world” when crafting AI regulatory policies.

The 41-page interim report, released on Tuesday, comes from the Joint California Policy Working Group on Frontier AI Models, an effort organized by Governor Gavin Newsom following his veto of California’s controversial AI safety bill, SB 1047. While Newsom found that SB 1047 missed the mark, he acknowledged last year the need for a more extensive assessment of AI risks to inform legislators.

In the report, Li, along with co-authors UC Berkeley College of Computing Dean Jennifer Chayes and Carnegie Endowment for International Peace President Mariano-Florentino Cuéllar, argues in favor of laws that would increase transparency into what frontier AI labs such as OpenAI are building. Industry stakeholders from across the ideological spectrum reviewed the report before its publication, including staunch AI safety advocates like Turing Award winner Yoshua Bengio as well as those who argued against SB 1047, such as Databricks co-founder Ion Stoica.

According to the report, the novel risks posed by AI systems may necessitate laws that would force AI model developers to publicly report their safety tests, data acquisition practices, and security measures. The report also advocates for increased standards around third-party evaluations of these metrics and corporate policies, in addition to expanded whistleblower protections for AI company employees and contractors.

Li et al. write that there’s an “inconclusive level of evidence” for AI’s potential to help carry out cyberattacks, create biological weapons, or bring about other “extreme” threats. They also argue, however, that AI policy should not only address current risks, but also anticipate future consequences that might occur without sufficient safeguards.

“For example, we do not need to observe a nuclear weapon [exploding] to predict reliably that it could and would cause extensive harm,” the report states. “If those who speculate about the most extreme risks are right, and we are uncertain if they will be, then the stakes and costs for inaction on frontier AI at this current moment are extremely high.”

The report recommends a two-pronged strategy to boost transparency in AI model development: trust but verify. AI model developers and their employees should be provided avenues to report on areas of public concern, the report says, such as internal safety testing, while also being required to submit testing claims for third-party verification.

While the report, the final version of which is due out in June 2025, endorses no specific legislation, it has been well received by experts on both sides of the AI policymaking debate.

Dean Ball, an AI-focused research fellow at George Mason University who was critical of SB 1047, said in a post on X that the report was a promising step for California’s AI safety regulation. It’s also a win for AI safety advocates, according to California State Senator Scott Wiener, who introduced SB 1047 last year. Wiener said in a press release that the report builds on “urgent conversations around AI governance we began in the legislature [in 2024].”

The report appears to align with several components of SB 1047 and Wiener’s follow-up bill, SB 53, such as requiring AI model developers to report the results of safety tests. Taking a broader view, it seems to be a much-needed win for AI safety advocates, whose agenda has lost ground in the last year.

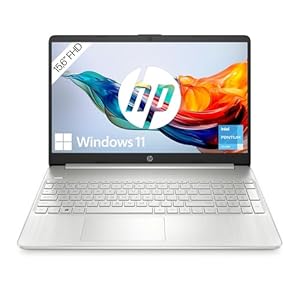
